WireGuard over GRETAP over WireGuard?

The purpose

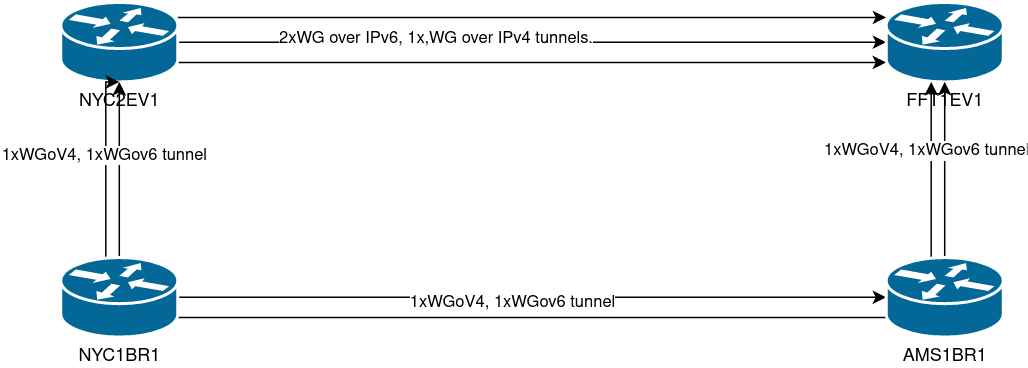

Recently I have been seeing some instability on my WireGuard tunnels from NYC1BR1 to the rest of AS203528.

I also have a couple of VMs on Linode, “NYC2EV1” and “FFT1EV1”, part of what was my legacy network where I learned the IPv6 basics (Linode gives out an IPv6 /56 upon request).

This network is connected to my internal network with GRETAP tunnels over AS203528. However, both Linodes still have tunnels between each other.

fabrizzio@NYC2EV1:~$ sh interfaces | match FFT1

wg4 172.30.238.2/30 u/u To FFT1EV1 v4/v6 ***IPv6 Inter-Region***

wg5 172.30.238.6/30 u/u To FFT1EV1 v4/v6 ***IPv6 Inter-Region***

wg6 172.30.238.10/30 u/u From FFT1EV1 v4/v6 ***IPv6 Inter-Region***

I have noticed that whatever issue BuyVM might have does not affect the connection towards Linode (between NYC1BR1 and NYC2EV1), so that gave me the idea to build tunnels over that path.

Dealing with fragmentation and TTL Inheritance

AS203528 is built on the assumption that the tunnels between the routers have a minimum MTU of 1420 (WireGuard). If I want to build a tunnel with an inside MTU of 1420 over a path that itself has an MTU of 1420, then something must fragment.
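To put rough numbers on that, here is a small Python sketch of the arithmetic. It assumes the tunnel is a keyed GRETAP over IPv4, like the configs in this post; the header sizes are the standard ones, and the function and constant names are made up for the illustration.

```python
# Per-packet overhead of one keyed GRETAP layer over IPv4.
IPV4_HEADER = 20      # outer IPv4 header, no options
GRE_HEADER = 4        # base GRE header
GRE_KEY = 4           # optional key field (the tunnels here set "key")
ETHERNET_HEADER = 14  # GRETAP carries the inner Ethernet header as well

GRETAP_OVERHEAD = IPV4_HEADER + GRE_HEADER + GRE_KEY + ETHERNET_HEADER


def outer_packet_size(inner_ip_packet: int) -> int:
    """Size of the outer IPv4 packet that carries one inner IP packet."""
    return inner_ip_packet + GRETAP_OVERHEAD


def fits(inner_ip_packet: int, path_mtu: int) -> bool:
    """True if the encapsulated packet crosses the path without fragmenting."""
    return outer_packet_size(inner_ip_packet) <= path_mtu


if __name__ == "__main__":
    print(GRETAP_OVERHEAD)          # 42
    print(outer_packet_size(1420))  # 1462
    print(fits(1420, 1420))         # False
    print(1420 - GRETAP_OVERHEAD)   # 1378, largest inner packet that still fits
```

So a full-size 1420-byte packet turns into 1462 bytes on a 1420-byte path. Either the inner MTU drops to 1378, or the outer packet has to be fragmented.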

If you want to allow fragmentation of your GRE tunnel, for some reason you must enable TTL inheritance (ttl 0) whenever you set the no-pmtu-discovery / ignore-df flags. (I am not entirely sure why; the ip-tunnel man page only notes that a fixed TTL is incompatible with nopmtudisc, because tunneling with a fixed TTL always does PMTU discovery.)

This causes the odd effect seen in the trace below: the tunnel is not completely transparent, so packets sent into the tunnel with a low TTL (TTL=1, for example) will not make it all the way to the other end.

(snippet from a tunnel I use to remotely manage an LTE modem)

fabrizzio@OSR1CR4# show interfaces tunnel tun4

description "OSR1E2 vtnet6 - VLN1 LTE WAN MGMT"

encapsulation gretap

mtu 1500

parameters {

ip {

ignore-df

key 401

no-pmtu-discovery

ttl 0

}

}

remote 192.168.255.20

source-address 192.168.254.13

traceroute to 192.168.51.254 (192.168.51.254), 30 hops max, 60 byte packets

1 _gateway (192.168.20.1) 0.485 ms 0.432 ms 0.418 ms

2 172.27.16.73 (172.27.16.73) 0.655 ms 0.633 ms 0.612 ms

3 172.27.16.17 (172.27.16.17) 0.998 ms 0.978 ms 0.959 ms

4 172.27.18.66 (172.27.18.66) 1.143 ms 1.178 ms 1.166 ms

5 * * *

6 * * *

7 * * *

8 * * *

9 192.168.51.254 (192.168.51.254) 251.629 ms 201.757 ms 201.726 ms
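The gap at hops 5 to 8 can be reproduced with a toy model in Python. This is only a sketch under assumptions: the target sits directly behind the far end of the tunnel, the underlay between the tunnel endpoints has four routers (inferred from the four silent hops, not verified), and an outer packet that expires in the underlay produces an ICMP error addressed to the tunnel endpoint rather than to the host running traceroute. The function name and parameters are hypothetical.

```python
def traceroute(routers_before_tunnel: int, underlay_routers: int,
               max_hops: int = 30) -> list[str]:
    """Toy traceroute across an L2 tunnel configured with 'ttl 0' (inherit).

    Returns one entry per probe: "router" when a router on the visible
    path answers, "*" when the probe dies inside the tunnel underlay,
    and "destination" when the target answers (which ends the trace).
    """
    results = []
    for probe_ttl in range(1, max_hops + 1):
        # Routers in front of the tunnel behave normally: the probe
        # expires at router number probe_ttl and that router answers.
        if probe_ttl <= routers_before_tunnel:
            results.append("router")
            continue

        # TTL left on the inner packet when it gets encapsulated.
        inner_ttl = probe_ttl - routers_before_tunnel
        # "ttl 0" copies the inner TTL into the outer header.
        outer_ttl = inner_ttl

        # Crossing N underlay routers needs an outer TTL greater than N.
        # If the outer packet expires, nobody answers the prober.
        if outer_ttl <= underlay_routers:
            results.append("*")
            continue

        # The outer packet reached the far end; the inner packet is
        # delivered with its own TTL untouched by the tunnel.
        results.append("destination")
        break
    return results


if __name__ == "__main__":
    # Four visible routers, then four underlay routers, as assumed above.
    print(traceroute(routers_before_tunnel=4, underlay_routers=4))
```

With those assumptions the model gives four answering routers, four silent hops, and the destination at hop 9, which is the shape of the trace above.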

This is a pain if you want to bring up routing protocols over this type of tunnel: OSPF, for example, sends its packets with TTL=1, so with TTL inheritance they would expire at the first underlay hop.

So what can I do? Well, where there is a will, there is a way.

First attempt

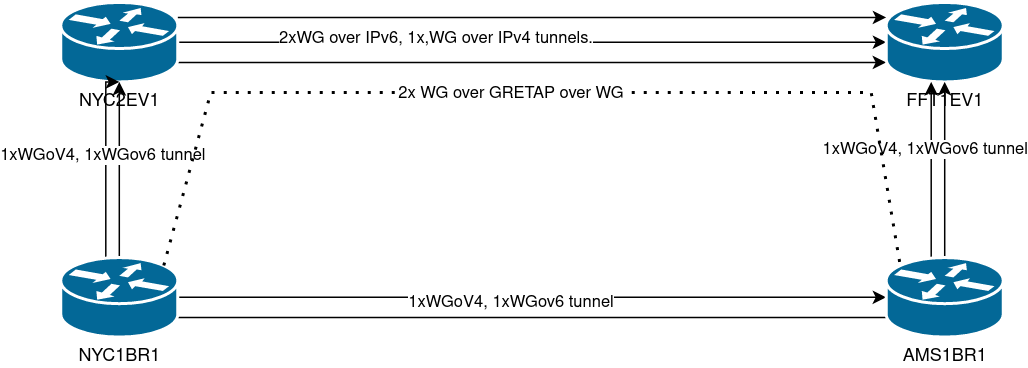

I will build two GRETAP tunnels that allow fragmentation between NYC1BR1 and AMS1BR1, routed through NYC2EV1 and FFT1EV1. Static routes on both of them make sure recursive routing over AS203528 is impossible.

Then I will build GRE tunnels on top of those GRETAP tunnels, so the outer GRETAP tunnel takes care of fragmentation and the inner GRE tunnel gives me the untouched TTL with the 1420 MTU I want.

fabrizzio@NYC1BR1# sh interfaces tunnel tun10

address 172.27.0.77/30

description "Wrapper GRE NYC1BR1 (NYC2EV2-FFT1EV1) AMS1BR1 v4"

encapsulation gretap

mtu 1500

parameters {

ip {

ignore-df

key 400

no-pmtu-discovery

ttl 0

}

}

remote 172.27.255.5

source-address 172.27.255.1

fabrizzio@NYC1BR1# run ping 172.27.0.78 size 1472 do-not-fragment

PING 172.27.0.78 (172.27.0.78) 1472(1500) bytes of data.

1480 bytes from 172.27.0.78: icmp_seq=1 ttl=64 time=89.4 ms

1480 bytes from 172.27.0.78: icmp_seq=2 ttl=64 time=91.8 ms

1480 bytes from 172.27.0.78: icmp_seq=3 ttl=64 time=89.2 ms

^C

--- 172.27.0.78 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2004ms

rtt min/avg/max/mdev = 89.184/90.133/91.802/1.183 ms

[edit]

fabrizzio@NYC1BR1# run traceroute 172.27.0.78

traceroute to 172.27.0.78 (172.27.0.78), 30 hops max, 60 byte packets

1 * * *

2 * * *

3 172.27.0.78 (172.27.0.78) 88.645 ms 88.651 ms 88.634 ms

Good enough for now! The inner tunnel will take care of the TTL issue :D

fabrizzio@NYC1BR1# show interfaces tunnel tun11

address 172.27.0.81/30

description "To AMS1BR1 via tun10 wrapper"

encapsulation gre

ip {

adjust-mss 1372

}

mtu 1420

parameters {

ip {

key 401

}

}

remote 172.27.0.78

source-address 172.27.0.77

The results

It doesn’t work. Somehow the TTL of the innermost packet still gets inherited by the outer GRETAP tunnel. The packet capture below is from a traceroute, captured at NYC2EV1: the first trace probes have TTL=1, the inner tunnel has TTL=255, and the outer tunnel is somehow using TTL=1.

15:19:01.853902 ip: (tos 0x0, ttl 1, id 46455, offset 0, flags [none], proto GRE (47), length 144)

172.27.255.1 > 172.27.255.5: GREv0, Flags [key present], key=0x190, proto TEB (0x6558), length 124

2e:62:e6:af:07:36 > 6e:7a:1a:84:7a:5d, ethertype IPv4 (0x0800), length 116: (tos 0x0, ttl 255, id 64589, offset 0, flags [DF], proto GRE (47), length 102)

172.27.0.77 > 172.27.0.78: GREv0, Flags [key present], key=0x191, proto TEB (0x6558), length 82

9e:71:16:29:bd:df > 96:10:1d:7a:3c:52, ethertype IPv4 (0x0800), length 74: (tos 0x0, ttl 1, id 7341, offset 0, flags [none], proto UDP (17), length 60)

fabrizzio@NYC1BR1:~$ traceroute 172.27.0.82

traceroute to 172.27.0.82 (172.27.0.82), 30 hops max, 60 byte packets

1 * * *

2 * * *

3 172.27.0.82 (172.27.0.82) 88.887 ms * 88.966 ms
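To make the capture above easier to read, here is a small Python sketch that pulls the TTL, protocol and length out of each IP header, outermost first. The three header lines are copied from the capture and trimmed to the IP part; the regular expression and the helper name are just for this illustration and only target this particular tcpdump -v layout.

```python
import re

# The three IP headers from the capture, outermost first
# (trimmed to the parenthesised IP fields).
CAPTURE = """\
ip: (tos 0x0, ttl 1, id 46455, offset 0, flags [none], proto GRE (47), length 144)
ethertype IPv4 (0x0800), length 116: (tos 0x0, ttl 255, id 64589, offset 0, flags [DF], proto GRE (47), length 102)
ethertype IPv4 (0x0800), length 74: (tos 0x0, ttl 1, id 7341, offset 0, flags [none], proto UDP (17), length 60)
"""

# Matches "ttl N, ... proto NAME (num), length N)" within a single line.
HEADER = re.compile(r"ttl (\d+),.*proto (\w+) \(\d+\), length (\d+)\)")


def ip_layers(text: str) -> list[tuple[int, str, int]]:
    """Return (ttl, protocol, ip_length) for each IP header, outermost first."""
    return [(int(m.group(1)), m.group(2), int(m.group(3)))
            for m in HEADER.finditer(text)]


if __name__ == "__main__":
    outer, inner_tunnel, probe = ip_layers(CAPTURE)
    # The outer header carries the probe's TTL, not the inner tunnel's 255.
    print("outer TTL equals probe TTL:", outer[0] == probe[0])  # True
    # Each tunnel layer in this capture adds the same number of bytes.
    print(outer[2] - inner_tunnel[2], inner_tunnel[2] - probe[2])  # 42 42
```

Read this way, the outer header has the probe's TTL of 1 while the inner tunnel header in the middle has 255, and each layer adds 42 bytes.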

I tried switching the inner tunnel to GRETAP, making the inner GRETAP tunnel a member of a bridge, and adding “ttl 255” on the inner tunnel. None of it got rid of the TTL inheritance I don’t want.

Second attempt

Then an idea came to mind :D I should just run WireGuard on top of the outer GRETAP tunnel. Maybe that way it will work, and the Linux kernel will be unaware of the nasty stuff I am doing.

tun10 172.27.0.77/30 u/u Wrapper GRE NYC1BR1 (NYC2EV2-FFT1EV1) AMS1BR1 v4

wg10 172.27.0.81/30 u/u

fabrizzio@NYC1BR1# show interfaces wireguard wg10

address 172.27.0.81/30

peer AMS1BR1 {

allowed-ips 0.0.0.0/0

allowed-ips 172.27.0.80/30

persistent-keepalive 15

preshared-key xxxxxxxxxxxx

public-key yyyyyyyyyyyyyyy

}

port 4001

private-key zzzzzzzzzz

fabrizzio@NYC1BR1:~$ ping 172.27.0.82 size 1392 do-not-fragment count 5 ada

PING 172.27.0.82 (172.27.0.82) 1392(1420) bytes of data.

1400 bytes from 172.27.0.82: icmp_seq=1 ttl=64 time=89.7 ms

1400 bytes from 172.27.0.82: icmp_seq=2 ttl=64 time=89.8 ms

1400 bytes from 172.27.0.82: icmp_seq=3 ttl=64 time=89.7 ms

1400 bytes from 172.27.0.82: icmp_seq=4 ttl=64 time=88.2 ms

1400 bytes from 172.27.0.82: icmp_seq=5 ttl=64 time=88.9 ms

--- 172.27.0.82 ping statistics ---

5 packets transmitted, 5 received, 0% packet loss, time 360ms

rtt min/avg/max/mdev = 88.234/89.266/89.819/0.614 ms, ipg/ewma 90.057/89.442 ms

fabrizzio@NYC1BR1:~$ traceroute 172.27.0.82

traceroute to 172.27.0.82 (172.27.0.82), 30 hops max, 60 byte packets

1 172.27.0.82 (172.27.0.82) 88.648 ms 88.607 ms 88.590 ms

It works!
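For the record, here is roughly what happens to a full-size packet in this setup, as a Python sketch. The assumptions: WireGuard runs over IPv4 on top of tun10 (60 bytes of overhead: 20 IPv4, 8 UDP, 32 WireGuard), tun10 is a keyed GRETAP over IPv4 (42 bytes), and the path underneath has an MTU of 1420. Padding of smaller WireGuard packets is ignored. All names in the code are made up for the example.

```python
WIREGUARD_OVERHEAD_V4 = 20 + 8 + 32  # IPv4 + UDP + WireGuard header and tag
GRETAP_OVERHEAD_V4 = 20 + 8 + 14     # IPv4 + GRE with key + inner Ethernet
IPV4_HEADER = 20


def wireguard_packet(inner: int) -> int:
    """IPv4 packet size after WireGuard encapsulation (no padding)."""
    return inner + WIREGUARD_OVERHEAD_V4


def gretap_packet(inner: int) -> int:
    """IPv4 packet size after keyed GRETAP encapsulation."""
    return inner + GRETAP_OVERHEAD_V4


def ipv4_fragments(packet_size: int, path_mtu: int) -> list[int]:
    """Sizes of the IPv4 packets left after fragmenting to path_mtu."""
    payload = packet_size - IPV4_HEADER
    max_payload = path_mtu - IPV4_HEADER
    # Every fragment except the last carries a multiple of 8 payload bytes.
    chunk = max_payload // 8 * 8
    sizes = []
    while payload > max_payload:
        sizes.append(chunk + IPV4_HEADER)
        payload -= chunk
    sizes.append(payload + IPV4_HEADER)
    return sizes


if __name__ == "__main__":
    wg = wireguard_packet(1420)      # packet handed to tun10
    outer = gretap_packet(wg)        # packet handed to the 1420-byte path
    print(wg, outer)                 # 1480 1522
    print(ipv4_fragments(outer, 1420))  # [1420, 122]
```

Under these assumptions every full-size packet on wg10 leaves as two fragments, which is the price of keeping a 1420 MTU on the inside.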

OSPF also establishes an adjacency just fine.

fabrizzio@NYC1BR1# show protocols ospf interface wg10

area 0

bfd {

}

cost 8510

network point-to-point

fabrizzio@NYC1BR1:~$ sh ip ospf nei | match wg10

192.168.248.10 1 Full/- 58.151s 36.226s 172.27.0.82 wg10:172.27.0.81 0 0 0

Performance

iperf3: the client has terminated

-----------------------------------------------------------

Server listening on 5201

-----------------------------------------------------------

Accepted connection from 172.27.0.81, port 41141

[ 5] local 172.27.0.82 port 5201 connected to 172.27.0.81 port 40899

[ ID] Interval Transfer Bitrate Jitter Lost/Total Datagrams

[ 5] 0.00-1.00 sec 5.19 MBytes 43.6 Mbits/sec 0.070 ms 0/3980 (0%)

[ 5] 1.00-2.00 sec 5.95 MBytes 49.9 Mbits/sec 0.083 ms 2/4564 (0.044%)

[ 5] 2.00-3.00 sec 5.96 MBytes 50.0 Mbits/sec 0.088 ms 0/4570 (0%)

[ 5] 3.00-4.00 sec 5.20 MBytes 43.5 Mbits/sec 4.730 ms 9/3994 (0.23%)

[ 5] 4.00-5.00 sec 6.41 MBytes 53.9 Mbits/sec 0.112 ms 237/5147 (4.6%)

[ 5] 5.00-6.00 sec 5.96 MBytes 50.0 Mbits/sec 0.105 ms 4/4569 (0.088%)

[ 5] 6.00-7.00 sec 5.95 MBytes 49.9 Mbits/sec 0.061 ms 1/4559 (0.022%)

[ 5] 7.00-8.00 sec 5.96 MBytes 50.0 Mbits/sec 0.105 ms 5/4575 (0.11%)

[ 5] 8.00-9.00 sec 5.88 MBytes 49.3 Mbits/sec 0.132 ms 0/4509 (0%)

[ 5] 9.00-10.00 sec 6.03 MBytes 50.6 Mbits/sec 0.117 ms 7/4627 (0.15%)

[ 5] 10.00-10.13 sec 788 KBytes 50.2 Mbits/sec 0.070 ms 0/590 (0%)

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Jitter Lost/Total Datagrams

[SUM] 0.0-10.1 sec 51 datagrams received out-of-order

[ 5] 0.00-10.13 sec 59.3 MBytes 49.1 Mbits/sec 0.070 ms 265/45684 (0.58%) receiver

-----------------------------------------------------------

Server listening on 5201

-----------------------------------------------------------

Accepted connection from 172.27.0.81, port 38943

[ 5] local 172.27.0.82 port 5201 connected to 172.27.0.81 port 51093

[ ID] Interval Transfer Bitrate Total Datagrams

[ 5] 0.00-1.00 sec 5.96 MBytes 50.0 Mbits/sec 4567

[ 5] 1.00-2.00 sec 5.96 MBytes 50.0 Mbits/sec 4569

[ 5] 2.00-3.00 sec 5.96 MBytes 50.0 Mbits/sec 4567

[ 5] 3.00-4.00 sec 5.96 MBytes 50.0 Mbits/sec 4569

[ 5] 4.00-5.00 sec 5.96 MBytes 50.0 Mbits/sec 4569

[ 5] 5.00-6.00 sec 5.96 MBytes 50.0 Mbits/sec 4570

[ 5] 6.00-7.00 sec 5.96 MBytes 50.0 Mbits/sec 4567

[ 5] 7.00-8.00 sec 5.96 MBytes 50.0 Mbits/sec 4568

[ 5] 8.00-9.00 sec 5.96 MBytes 50.0 Mbits/sec 4569

[ 5] 9.00-10.00 sec 5.96 MBytes 50.0 Mbits/sec 4569

[ 5] 10.00-10.21 sec 1.23 MBytes 50.1 Mbits/sec 941

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Jitter Lost/Total Datagrams

[ 5] 0.00-10.21 sec 60.8 MBytes 50.0 Mbits/sec 0.000 ms 0/46625 (0%) sender

-----------------------------------------------------------

Server listening on 5201

fabrizzio@NYC1BR1:~$ iperf3 -c 172.27.0.82 -B 172.27.0.81 -u -b50M

Connecting to host 172.27.0.82, port 5201

[ 5] local 172.27.0.81 port 40899 connected to 172.27.0.82 port 5201

[ ID] Interval Transfer Bitrate Total Datagrams

[ 5] 0.00-1.00 sec 5.96 MBytes 50.0 Mbits/sec 4565

[ 5] 1.00-2.00 sec 5.96 MBytes 50.0 Mbits/sec 4569

[ 5] 2.00-3.00 sec 5.96 MBytes 50.0 Mbits/sec 4569

[ 5] 3.00-4.00 sec 5.96 MBytes 50.0 Mbits/sec 4568

[ 5] 4.00-5.00 sec 5.96 MBytes 50.0 Mbits/sec 4569

[ 5] 5.00-6.00 sec 5.96 MBytes 50.0 Mbits/sec 4569

[ 5] 6.00-7.00 sec 5.96 MBytes 50.0 Mbits/sec 4569

[ 5] 7.00-8.00 sec 5.96 MBytes 50.0 Mbits/sec 4572

[ 5] 8.00-9.00 sec 5.96 MBytes 50.0 Mbits/sec 4565

[ 5] 9.00-10.00 sec 5.96 MBytes 50.0 Mbits/sec 4569

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Jitter Lost/Total Datagrams

[ 5] 0.00-10.00 sec 59.6 MBytes 50.0 Mbits/sec 0.000 ms 0/45684 (0%) sender

[ 5] 0.00-10.13 sec 59.3 MBytes 49.1 Mbits/sec 0.070 ms 265/45684 (0.58%) receiver

iperf Done.

fabrizzio@NYC1BR1:~$ iperf3 -c 172.27.0.82 -B 172.27.0.81 -u -b50M -R

Connecting to host 172.27.0.82, port 5201

Reverse mode, remote host 172.27.0.82 is sending

[ 5] local 172.27.0.81 port 51093 connected to 172.27.0.82 port 5201

[ ID] Interval Transfer Bitrate Jitter Lost/Total Datagrams

[ 5] 0.00-1.00 sec 6.09 MBytes 51.1 Mbits/sec 0.210 ms 433/5100 (8.5%)

[ 5] 1.00-2.00 sec 5.93 MBytes 49.7 Mbits/sec 0.100 ms 24/4569 (0.53%)

[ 5] 2.00-3.00 sec 5.87 MBytes 49.2 Mbits/sec 0.147 ms 54/4551 (1.2%)

[ 5] 3.00-4.00 sec 5.94 MBytes 49.9 Mbits/sec 0.230 ms 28/4583 (0.61%)

[ 5] 4.00-5.00 sec 5.94 MBytes 49.8 Mbits/sec 0.243 ms 22/4573 (0.48%)

[ 5] 5.00-6.00 sec 5.96 MBytes 50.0 Mbits/sec 0.184 ms 2/4572 (0.044%)

[ 5] 6.00-7.00 sec 5.93 MBytes 49.7 Mbits/sec 0.117 ms 26/4569 (0.57%)

[ 5] 7.00-8.00 sec 5.90 MBytes 49.5 Mbits/sec 0.136 ms 43/4569 (0.94%)

[ 5] 8.00-9.00 sec 5.86 MBytes 49.2 Mbits/sec 0.086 ms 73/4568 (1.6%)

[ 5] 9.00-10.00 sec 5.86 MBytes 49.1 Mbits/sec 0.167 ms 76/4565 (1.7%)

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Jitter Lost/Total Datagrams

[ 5] 0.00-10.21 sec 60.8 MBytes 50.0 Mbits/sec 0.000 ms 0/46625 (0%) sender

[ 5] 0.00-10.00 sec 59.3 MBytes 49.7 Mbits/sec 0.167 ms 781/46219 (1.7%) receiver
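As a quick sanity check on the receiver summary lines, the loss percentages can be recomputed from the lost/total datagram counts. A minimal Python sketch, with the counts copied from the output above and the direction labels added by me:

```python
def loss_percent(lost: int, total: int) -> float:
    """Datagram loss as a percentage of the datagrams sent."""
    return 100.0 * lost / total


# (lost, total) from the two receiver summary lines above.
RUNS = {
    "NYC1BR1 -> AMS1BR1": (265, 45684),
    "AMS1BR1 -> NYC1BR1 (-R)": (781, 46219),
}

if __name__ == "__main__":
    for name, (lost, total) in RUNS.items():
        print(f"{name}: {loss_percent(lost, total):.2f}% loss")
```

That works out to roughly 0.58% loss in one direction and about 1.7% in the other at 50 Mbit/s of UDP.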

I think the performance is more than enough for this abomination. The most I could get from TCP testing was ~110 Mbit/s.