VyOS 1.5, Segment Routing & GRE

I was reading about Segment Routing, and I wanted to give that technology a try.

I already had a GNS3 lab built with vSRX devices, with a basic IS-IS+LDP topology, running iBGP between PE routers and an L3VPN service. Unfortunately, after trying to enable SR on the vSRX devices, I found that you cannot configure an SRGB on them. I’d have to redo my lab with vMX, and that’s not fun for me: it takes it out of “weekend fun project” territory and into “homework”.

So I thought, why not deploy Segment Routing on my own network? Not directly on AS203528 yet (I don’t want to mess with it due to a current outage I have), but on my internal network. It all runs VyOS, with IS-IS as the IGP and iBGP (with 4 RRs). There’s no MPLS deployed there yet. After all, this network is supposed to be a lab (even though it has taken on more of a “production” role).

Initial deployment

First of all I updated all the nodes (except RRs) on my Internal VyOS network to the latest 1.5 nightly at the time (1.5-rolling-202312290919). Easy enough. All good so far.

Then it was just a matter of enabling SR within IS-IS (it already has default SRGB/SRLB values), assigning index values to the loopback prefixes on each router, and enabling MPLS on the internally-facing interfaces.

Here is an example: first the loopback IPs of one of my routers, then the config deployed to enable SR.

dum0 192.168.254.34/32 xx:xx:xx:xx:xx:xx default 1500 u/u Loopback / Tunnel source

2a0e:8f02:21d2:ffff::34/128

dum1 fc0e:8f02:21d2:ffff::34/128 xx:xx:xx:xx:xx:xx default 1500 u/u IPv6 iBGP next hop

set protocols isis segment-routing maximum-label-depth '15'

set protocols isis segment-routing prefix 2a0e:8f02:21d1:ffff::34/128 index value '341'

set protocols isis segment-routing prefix 192.168.254.34/32 index value '340'

set protocols isis segment-routing prefix fc0e:8f02:21d1:ffff::34/128 index value '342'

set protocols mpls interface 'eth0'

set protocols mpls interface 'eth1'

After deploying that everywhere - it just worked:

OSR2A1:~$ sh ip route 192.168.254.13

Routing entry for 192.168.254.13/32

Known via "isis", distance 115, metric 45210, best

Last update 1d00h32m ago

* 172.27.19.17, via eth1, label 16130, weight 1

OSR2A1:~$ sh ipv6 route 2a0e:8f02:21d1:ffff::13

Routing entry for 2a0e:8f02:21d1:ffff::13/128

Known via "isis", distance 115, metric 45210, best

Last update 1d00h33m ago

* fe80::9434:bdff:fe26:3f79, via eth1, label 16131, weight 1

OSR2A1:~$ sh ipv6 route fc0e:8f02:21d1:ffff::13

Routing entry for fc0e:8f02:21d1:ffff::13/128

Known via "isis", distance 115, metric 45210, best

Last update 1d00h33m ago

* fe80::9434:bdff:fe26:3f79, via eth1, label 16132, weight 1
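The labels in these routes follow from how a prefix-SID index maps to a label: the label is the SRGB base plus the configured index. Here is a minimal sketch of that arithmetic. It assumes the SRGB starts at 16000, which is what the label values above suggest. It also assumes every router uses the same index scheme as the example config (last octet of the loopback times ten, then +0/+1/+2 for the three loopback prefixes). The function names are purely illustrative.

```python
# Assumption: SRGB base inferred from the labels in the route output above.
SRGB_BASE = 16000


def sid_label(index, srgb_base=SRGB_BASE):
    """Return the MPLS label for a prefix-SID index (label = SRGB base + index)."""
    return srgb_base + index


def loopback_indexes(last_octet):
    """Hypothetical helper reproducing the index scheme from the example config."""
    base = last_octet * 10
    return {"ipv4": base, "ipv6_public": base + 1, "ipv6_ula": base + 2}


if __name__ == "__main__":
    # Router .13: the three loopback prefixes seen in the routes above.
    for name, index in loopback_indexes(13).items():
        print(name, index, sid_label(index))
```

For the router ending in .13 this gives labels 16130, 16131 and 16132, matching the three route entries.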

Problems

It all worked nicely in the beginning, or so I thought. Later in the day I found sporadic performance degradation on my network, to/from the IPv6 Internet.

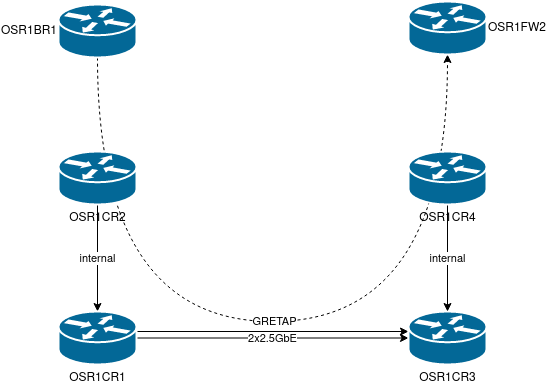

My internal network is connected to the public v6 Internet through a redundant set of firewalls (OSR1FW1/OSR1FW2), which run on different servers at home.

Each of these firewalls is connected to two border routers I have at home, OSR1BR1/OSR1BR2. The connection between firewall and border router on the same server is through a local VLAN. However, the connection to the FW/BR on the other server is through a GRETAP tunnel traversing my core.

The active firewall will do ECMP to/from both of the border routers. So that would partly explain the inconsistent experience and degradation.

Below is the example configuration of such a GRETAP tunnel across my core, for the Firewall to BR connection: (OSR1CR4 side looks pretty much the same)

OSR1CR2# sh interfaces ethernet eth12

description "OSR1FW2 - OSR1BR1 VLAN 543"

offload {

gro

gso

sg

tso

}

OSR1CR2# sh interfaces bridge br2

description "OSR1FW2 - OSR1BR1 VLAN 543"

enable-vlan

ipv6 {

address {

no-default-link-local

}

}

member {

interface eth12 {

allowed-vlan 100

native-vlan 100

}

interface tun2 {

allowed-vlan 100

native-vlan 100

}

}

OSR1CR2# sh interfaces tunnel tun2

description "OSR1FW2 - OSR1BR1 VLAN 543"

encapsulation gretap

mtu 1600

parameters {

ip {

key 543

}

}

remote 192.168.254.13

source-address 192.168.254.11

I noticed that, on the core router where the tunnel exists, whenever the performance issue was seen, the core-facing interface (in this case OSR1CR2 to OSR1CR1) would drop packets on TX:

fabrizzio@OSR1CR2:~$ sh interfaces ethernet eth0

eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1800 qdisc mq state UP group default qlen 1000

link/ether f6:65:87:96:70:22 brd ff:ff:ff:ff:ff:ff

altname enp0s18

altname ens18

inet 172.27.16.38/30 brd 172.27.16.39 scope global eth0

valid_lft forever preferred_lft forever

inet6 2a0e:8f02:21d1:feed:0:1:10:12/126 scope global

valid_lft forever preferred_lft forever

inet6 fe80::f465:87ff:fe96:7022/64 scope link

valid_lft forever preferred_lft forever

Description: To OSR1CR1

RX: bytes packets errors dropped overrun mcast

1261617086 3428022 0 20 0 0

TX: bytes packets errors dropped carrier collisions

5598511155 6117186 0 5212 0 0 <<<<<<<<<<<<<<<

fabrizzio@OSR1CR2:~$ sh ip route 192.168.254.13

Routing entry for 192.168.254.13/32

Known via "isis", distance 115, metric 1210, best

Last update 14:42:50 ago

* 172.27.16.37, via eth0, label 16130, weight 1

Here is an iperf3 test running OSR1FW2 <> OSR1BR1, to test the performance across the GRETAP tunnel. The results are pretty bad; this should be close to 2 Gbit/s. Tons of retransmissions and SACKs were seen. The tcpdump was taken on the OSR1CR4 interface facing OSR1FW2, and it shows that the traffic enters OSR1CR4 but the far end is missing some segments.

OSR1FW2:~$ iperf3 -c 172.27.1.17

Connecting to host 172.27.1.17, port 5201

[ 5] local 172.27.1.18 port 39192 connected to 172.27.1.17 port 5201

[ ID] Interval Transfer Bitrate Retr Cwnd

[ 5] 0.00-1.00 sec 139 KBytes 1.13 Mbits/sec 43 8.48 KBytes

[ 5] 1.00-2.00 sec 45.2 KBytes 371 Kbits/sec 40 8.48 KBytes

[ 5] 2.00-3.00 sec 0.00 Bytes 0.00 bits/sec 38 8.48 KBytes

^C- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-3.09 sec 184 KBytes 487 Kbits/sec 121 sender

[ 5] 0.00-3.09 sec 0.00 Bytes 0.00 bits/sec receiver

iperf3: interrupt - the client has terminated

16:39:41.862143 IP 172.27.1.18.39192 > 172.27.1.17.5201: Flags [S], seq 1766734757, win 64240, options [mss 1460,sackOK,TS val 2424729071 ecr 0,nop,wscale 7], length 0

16:39:41.862850 IP 172.27.1.17.5201 > 172.27.1.18.39192: Flags [S.], seq 3455293594, ack 1766734758, win 65160, options [mss 1460,sackOK,TS val 4290898765 ecr 2424729071,nop,wscale 7], length 0

16:39:41.863012 IP 172.27.1.18.39192 > 172.27.1.17.5201: Flags [.], ack 1, win 502, options [nop,nop,TS val 2424729072 ecr 4290898765], length 0

16:39:41.863051 IP 172.27.1.18.39192 > 172.27.1.17.5201: Flags [P.], seq 1:38, ack 1, win 502, options [nop,nop,TS val 2424729072 ecr 4290898765], length 37

16:39:41.863749 IP 172.27.1.17.5201 > 172.27.1.18.39192: Flags [.], ack 38, win 509, options [nop,nop,TS val 4290898766 ecr 2424729072], length 0

16:39:41.864481 IP 172.27.1.17.5201 > 172.27.1.18.39180: Flags [P.], seq 3:4, ack 166, win 508, options [nop,nop,TS val 4290898766 ecr 2424729070], length 1

16:39:41.864492 IP 172.27.1.17.5201 > 172.27.1.18.39180: Flags [P.], seq 4:5, ack 166, win 508, options [nop,nop,TS val 4290898766 ecr 2424729070], length 1

16:39:41.864616 IP 172.27.1.18.39180 > 172.27.1.17.5201: Flags [.], ack 5, win 502, options [nop,nop,TS val 2424729073 ecr 4290898764], length 0

16:39:41.864658 IP 172.27.1.18.39192 > 172.27.1.17.5201: Flags [P.], seq 38:7278, ack 1, win 502, options [nop,nop,TS val 2424729073 ecr 4290898766], length 7240

16:39:41.864700 IP 172.27.1.18.39192 > 172.27.1.17.5201: Flags [P.], seq 7278:14518, ack 1, win 502, options [nop,nop,TS val 2424729073 ecr 4290898766], length 7240

16:39:41.864773 IP 172.27.1.18.39192 > 172.27.1.17.5201: Flags [.], seq 14518:15966, ack 1, win 502, options [nop,nop,TS val 2424729074 ecr 4290898766], length 1448

16:39:41.865324 IP 172.27.1.17.5201 > 172.27.1.18.39192: Flags [.], ack 38, win 509, options [nop,nop,TS val 4290898767 ecr 2424729072,nop,nop,sack 1 {14518:15966}], length 0

16:39:41.865437 IP 172.27.1.18.39192 > 172.27.1.17.5201: Flags [.], seq 38:1486, ack 1, win 502, options [nop,nop,TS val 2424729074 ecr 4290898767], length 1448

16:39:41.866101 IP 172.27.1.17.5201 > 172.27.1.18.39192: Flags [.], ack 1486, win 498, options [nop,nop,TS val 4290898768 ecr 2424729074,nop,nop,sack 1 {14518:15966}], length 0

16:39:41.866222 IP 172.27.1.18.39192 > 172.27.1.17.5201: Flags [P.], seq 1486:7278, ack 1, win 502, options [nop,nop,TS val 2424729075 ecr 4290898768], length 5792

16:39:42.070774 IP 172.27.1.18.39192 > 172.27.1.17.5201: Flags [.], seq 1486:2934, ack 1, win 502, options [nop,nop,TS val 2424729280 ecr 4290898768], length 1448

16:39:42.071778 IP 172.27.1.17.5201 > 172.27.1.18.39192: Flags [.], ack 2934, win 490, options [nop,nop,TS val 4290898974 ecr 2424729280,nop,nop,sack 1 {14518:15966}], length 0

16:39:42.072008 IP 172.27.1.18.39192 > 172.27.1.17.5201: Flags [P.], seq 2934:7278, ack 1, win 502, options [nop,nop,TS val 2424729281 ecr 4290898974], length 4344

16:39:42.072042 IP 172.27.1.18.39192 > 172.27.1.17.5201: Flags [.], seq 7278:8726, ack 1, win 502, options [nop,nop,TS val 2424729281 ecr 4290898974], length 1448

16:39:42.072743 IP 172.27.1.17.5201 > 172.27.1.18.39192: Flags [.], ack 2934, win 490, options [nop,nop,TS val 4290898975 ecr 2424729280,nop,nop,sack 2 {7278:8726}{14518:15966}], length 0

16:39:42.072898 IP 172.27.1.18.39192 > 172.27.1.17.5201: Flags [.], seq 2934:4382, ack 1, win 502, options [nop,nop,TS val 2424729282 ecr 4290898975], length 1448

16:39:42.073582 IP 172.27.1.17.5201 > 172.27.1.18.39192: Flags [.], ack 4382, win 479, options [nop,nop,TS val 4290898975 ecr 2424729282,nop,nop,sack 2 {7278:8726}{14518:15966}], length 0

16:39:42.073776 IP 172.27.1.18.39192 > 172.27.1.17.5201: Flags [P.], seq 4382:7278, ack 1, win 502, options [nop,nop,TS val 2424729283 ecr 4290898975], length 2896

16:39:42.073817 IP 172.27.1.18.39192 > 172.27.1.17.5201: Flags [P.], seq 15966:18862, ack 1, win 502, options [nop,nop,TS val 2424729283 ecr 4290898975], length 2896

The odd thing is that if I repeat the capture on the OSR1CR4 interface facing the core (OSR1CR3), the segments from OSR1FW2 to OSR1BR1 are already missing there: they are not being sent out towards the core.

The weird tcpdump filter is there because from OSR1CR4 towards the GRE destination OSR1CR2 we use MPLS label 16110, while due to penultimate hop popping (my hands keep typing pooping by themselves :D ) the traffic in the reverse direction arrives without a label. I need to be able to capture both directions.

fabrizzio@OSR1CR4:~$ sh ip route 192.168.254.11

Routing entry for 192.168.254.11/32

Known via "isis", distance 115, metric 1210, best

Last update 19:23:51 ago

* 172.27.16.41, via eth0, label 16110, weight 1

root@OSR1CR4:~# tcpdump -i eth0 "(src 192.168.254.11 && dst 192.168.254.13) or (mpls 16110 && (src 192.168.254.13 && dst 192.168.254.11))" | grep 0x21f

<snipped>

16:45:49.477938 MPLS (label 16110, tc 0, [S], ttl 64) IP 192.168.254.13 > 192.168.254.11: GREv0, key=0x21f, length 82: IP 172.27.1.18.37834 > 172.27.1.17.5201: Flags [S], seq 1179634274, win 64240, options [mss 1460,sackOK,TS val 2425096687 ecr 0,nop,wscale 7], length 0

16:45:49.478705 IP 192.168.254.11 > 192.168.254.13: GREv0, key=0x21f, length 82: IP 172.27.1.17.5201 > 172.27.1.18.37834: Flags [S.], seq 3242204630, ack 1179634275, win 65160, options [mss 1460,sackOK,TS val 4291266381 ecr 2425096687,nop,wscale 7], length 0

16:45:49.478851 MPLS (label 16110, tc 0, [S], ttl 64) IP 192.168.254.13 > 192.168.254.11: GREv0, key=0x21f, length 74: IP 172.27.1.18.37834 > 172.27.1.17.5201: Flags [.], ack 1, win 502, options [nop,nop,TS val 2425096688 ecr 4291266381], length 0

16:45:49.478865 MPLS (label 16110, tc 0, [S], ttl 64) IP 192.168.254.13 > 192.168.254.11: GREv0, key=0x21f, length 111: IP 172.27.1.18.37834 > 172.27.1.17.5201: Flags [P.], seq 1:38, ack 1, win 502, options [nop,nop,TS val 2425096688 ecr 4291266381], length 37

16:45:49.479638 IP 192.168.254.11 > 192.168.254.13: GREv0, key=0x21f, length 74: IP 172.27.1.17.5201 > 172.27.1.18.37834: Flags [.], ack 38, win 509, options [nop,nop,TS val 4291266382 ecr 2425096688], length 0

16:45:49.480309 IP 192.168.254.11 > 192.168.254.13: GREv0, key=0x21f, length 75: IP 172.27.1.17.5201 > 172.27.1.18.37828: Flags [P.], seq 3:4, ack 166, win 508, options [nop,nop,TS val 4291266382 ecr 2425096686], length 1

16:45:49.480330 IP 192.168.254.11 > 192.168.254.13: GREv0, key=0x21f, length 75: IP 172.27.1.17.5201 > 172.27.1.18.37828: Flags [P.], seq 4:5, ack 166, win 508, options [nop,nop,TS val 4291266382 ecr 2425096686], length 1

16:45:49.480471 MPLS (label 16110, tc 0, [S], ttl 64) IP 192.168.254.13 > 192.168.254.11: GREv0, key=0x21f, length 74: IP 172.27.1.18.37828 > 172.27.1.17.5201: Flags [.], ack 5, win 502, options [nop,nop,TS val 2425096689 ecr 4291266379], length 0

16:45:49.480677 MPLS (label 16110, tc 0, [S], ttl 64) IP 192.168.254.13 > 192.168.254.11: GREv0, key=0x21f, length 1522: IP 172.27.1.18.37834 > 172.27.1.17.5201: Flags [.], seq 14518:15966, ack 1, win 502, options [nop,nop,TS val 2425096689 ecr 4291266382], length 1448

16:45:49.481335 IP 192.168.254.11 > 192.168.254.13: GREv0, key=0x21f, length 86: IP 172.27.1.17.5201 > 172.27.1.18.37834: Flags [.], ack 38, win 509, options [nop,nop,TS val 4291266383 ecr 2425096688,nop,nop,sack 1 {14518:15966}], length 0

16:45:49.481478 MPLS (label 16110, tc 0, [S], ttl 64) IP 192.168.254.13 > 192.168.254.11: GREv0, key=0x21f, length 1522: IP 172.27.1.18.37834 > 172.27.1.17.5201: Flags [.], seq 38:1486, ack 1, win 502, options [nop,nop,TS val 2425096690 ecr 4291266383], length 1448

16:45:49.482121 IP 192.168.254.11 > 192.168.254.13: GREv0, key=0x21f, length 86: IP 172.27.1.17.5201 > 172.27.1.18.37834: Flags [.], ack 1486, win 498, options [nop,nop,TS val 4291266384 ecr 2425096690,nop,nop,sack 1 {14518:15966}], length 0

16:45:49.686840 MPLS (label 16110, tc 0, [S], ttl 64) IP 192.168.254.13 > 192.168.254.11: GREv0, key=0x21f, length 1522: IP 172.27.1.18.37834 > 172.27.1.17.5201: Flags [.], seq 1486:2934, ack 1, win 502, options [nop,nop,TS val 2425096896 ecr 4291266384], length 1448

16:45:49.687786 IP 192.168.254.11 > 192.168.254.13: GREv0, key=0x21f, length 86: IP 172.27.1.17.5201 > 172.27.1.18.37834: Flags [.], ack 2934, win 490, options [nop,nop,TS val 4291266590 ecr 2425096896,nop,nop,sack 1 {14518:15966}], length 0

16:45:49.687950 MPLS (label 16110, tc 0, [S], ttl 64) IP 192.168.254.13 > 192.168.254.11: GREv0, key=0x21f, length 1522: IP 172.27.1.18.37834 > 172.27.1.17.5201: Flags [.], seq 2934:4382, ack 1, win 502, options [nop,nop,TS val 2425096897 ecr 4291266590], length 1448

16:45:49.688011 MPLS (label 16110, tc 0, [S], ttl 64) IP 192.168.254.13 > 192.168.254.11: GREv0, key=0x21f, length 1522: IP 172.27.1.18.37834 > 172.27.1.17.5201: Flags [.], seq 4382:5830, ack 1, win 502, options [nop,nop,TS val 2425096897 ecr 4291266590], length 1448

Troubleshooting

First of all I removed all the offloads configured via VyOS on the core-facing interface:

fabrizzio@OSR1CR4:~$ configure

[edit]

fabrizzio@OSR1CR4# delete interfaces ethernet eth0 offload

[edit]

fabrizzio@OSR1CR4# commit

This did not fix the issue; the TX drops were still there:

OSR1CR4:~$ sh interfaces ethernet eth0

eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1800 qdisc mq state UP group default qlen 1000

link/ether 3a:e6:63:fc:e9:68 brd ff:ff:ff:ff:ff:ff

altname enp0s18

altname ens18

inet 172.27.16.42/30 brd 172.27.16.43 scope global eth0

valid_lft forever preferred_lft forever

inet6 2a0e:8f02:21d1:feed:0:1:11:12/126 scope global

valid_lft forever preferred_lft forever

inet6 fe80::38e6:63ff:fefc:e968/64 scope link

valid_lft forever preferred_lft forever

Description: To OSR1CR3

RX: bytes packets errors dropped overrun mcast

7400341272 9874881 0 28 0 0

TX: bytes packets errors dropped carrier collisions

1002401375 4853418 0 36577 0 0 <<<<

ethtool shows no queue drops:

fabrizzio@OSR1CR4:~$ ethtool -S eth0

NIC statistics:

rx_queue_0_packets: 9878837

rx_queue_0_bytes: 7405521629

rx_queue_0_drops: 0

rx_queue_0_xdp_packets: 0

rx_queue_0_xdp_tx: 0

rx_queue_0_xdp_redirects: 0

rx_queue_0_xdp_drops: 0

rx_queue_0_kicks: 2066

tx_queue_0_packets: 4853851

tx_queue_0_bytes: 1002468305

tx_queue_0_xdp_tx: 0

tx_queue_0_xdp_tx_drops: 0

tx_queue_0_kicks: 4595142

tx_queue_0_tx_timeouts: 0

fabrizzio@OSR1CR4:~$ ethtool -k eth0

Features for eth0:

rx-checksumming: on [fixed]

tx-checksumming: on

tx-checksum-ipv4: off [fixed]

tx-checksum-ip-generic: on

tx-checksum-ipv6: off [fixed]

tx-checksum-fcoe-crc: off [fixed]

tx-checksum-sctp: off [fixed]

scatter-gather: off

tx-scatter-gather: off

tx-scatter-gather-fraglist: off [fixed]

tcp-segmentation-offload: off

tx-tcp-segmentation: off

tx-tcp-ecn-segmentation: off

tx-tcp-mangleid-segmentation: off

tx-tcp6-segmentation: off

generic-segmentation-offload: off

generic-receive-offload: off

large-receive-offload: off [fixed]

rx-vlan-offload: off [fixed]

tx-vlan-offload: off [fixed]

ntuple-filters: off [fixed]

receive-hashing: off [fixed]

highdma: on [fixed]

rx-vlan-filter: on [fixed]

vlan-challenged: off [fixed]

tx-lockless: off [fixed]

netns-local: off [fixed]

tx-gso-robust: on [fixed]

tx-fcoe-segmentation: off [fixed]

tx-gre-segmentation: off [fixed]

tx-gre-csum-segmentation: off [fixed]

tx-ipxip4-segmentation: off [fixed]

tx-ipxip6-segmentation: off [fixed]

tx-udp_tnl-segmentation: off [fixed]

tx-udp_tnl-csum-segmentation: off [fixed]

tx-gso-partial: off [fixed]

tx-tunnel-remcsum-segmentation: off [fixed]

tx-sctp-segmentation: off [fixed]

tx-esp-segmentation: off [fixed]

tx-udp-segmentation: off [fixed]

tx-gso-list: off [fixed]

fcoe-mtu: off [fixed]

tx-nocache-copy: off

loopback: off [fixed]

rx-fcs: off [fixed]

rx-all: off [fixed]

tx-vlan-stag-hw-insert: off [fixed]

rx-vlan-stag-hw-parse: off [fixed]

rx-vlan-stag-filter: off [fixed]

l2-fwd-offload: off [fixed]

hw-tc-offload: off [fixed]

esp-hw-offload: off [fixed]

esp-tx-csum-hw-offload: off [fixed]

rx-udp_tunnel-port-offload: off [fixed]

tls-hw-tx-offload: off [fixed]

tls-hw-rx-offload: off [fixed]

rx-gro-hw: on

tls-hw-record: off [fixed]

rx-gro-list: off

macsec-hw-offload: off [fixed]

rx-udp-gro-forwarding: off

hsr-tag-ins-offload: off [fixed]

hsr-tag-rm-offload: off [fixed]

hsr-fwd-offload: off [fixed]

hsr-dup-offload: off [fixed]

Seeing this, I had to dig deeper and do some research. I found something related to MPLS + offloading drops on LKML and openvswitch, as well as a tangentially-related blog post at Cloudflare.

I decided that the best path forward would be to figure out whether, and why, the kernel was dropping the packets. I added the Debian Bookworm repos to OSR1CR2 (far end of the GRE tunnel) and installed dropwatch.

I ran it with dropwatch -l kas, switched it to per-packet alerts with set alertmode packet, saw what was normal (lots of ICMPv6 drops…) and re-ran the iperf3 test. This is what started popping out. Protocol 0x8847 (MPLS Unicast) is a good hint that it’s the traffic I care about. The length is large, well above the interface MTU, maybe due to the various offloads doing their things.

drop at: validate_xmit_skb+0x29c/0x320 (0xffffffff8d6b1a6c)

origin: software

timestamp: Mon Jan 1 11:52:45 2024 028417108 nsec

protocol: 0x8847

length: 3008

original length: 3008

drop reason: NOT_SPECIFIED
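The 3008-byte length is worth a quick sanity check against the captures. This is a rough sketch under a few assumptions of mine: plain Ethernet framing, IPv4 without options, a TCP header carrying only the timestamp option (as in the tcpdump output), an 8-byte GRE header (base header plus key) and a single MPLS label. The constant and function names are illustrative only.

```python
# Header sizes in bytes (assumptions listed in the text above).
ETH = 14           # Ethernet header, no VLAN tag
IPV4 = 20          # IPv4 header without options
TCP_WITH_TS = 32   # 20-byte TCP header + 12 bytes of timestamp option
GRE_WITH_KEY = 8   # 4-byte GRE base header + 4-byte key
MPLS_LABEL = 4     # one label stack entry


def gre_length(tcp_payload):
    """Length tcpdump reports for the GRE packet: GRE header + inner Ethernet frame."""
    inner_frame = ETH + IPV4 + TCP_WITH_TS + tcp_payload
    return GRE_WITH_KEY + inner_frame


def wire_length(tcp_payload):
    """Full frame on the core-facing link: Ethernet + MPLS + outer IPv4 + GRE packet."""
    return ETH + MPLS_LABEL + IPV4 + gre_length(tcp_payload)


if __name__ == "__main__":
    print(gre_length(1448))       # one 1448-byte TCP segment
    print(wire_length(2 * 1448))  # two segments still glued together
```

One 1448-byte segment gives a GRE length of 1522, the same value the captures show. Two segments that were never split apart give a 3008-byte frame, which is exactly the dropped length. That is consistent with an unsegmented TSO/GSO packet reaching the transmit path after the MPLS label was pushed, although the arithmetic alone does not prove it.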

After digging around using Google, I found a reference which pointed me to offloads being the potential culprit. I had already disabled offloads on the core-facing interface that is actually dropping the packets.

I tried disabling the offloads on the OSR1CR4 interface facing OSR1FW2. No change in performance either.

The last option I had was to check both the bridge tying everything together and the tunnel interface. Neither has any offloads configured in VyOS.

fabrizzio@OSR1CR4# show interfaces bridge br2

description "OSR1FW2 - OSR1BR1 VLAN 543"

enable-vlan

ipv6 {

address {

no-default-link-local

}

}

member {

interface eth10 {

allowed-vlan 100

native-vlan 100

}

interface tun2 {

allowed-vlan 100

native-vlan 100

}

}

[edit]

However the tunnel interface on VyOS does have several offloads enabled by default:

fabrizzio@OSR1CR4:~$ ethtool -k tun2

Features for tun2:

rx-checksumming: off [fixed]

tx-checksumming: on

tx-checksum-ipv4: off [fixed]

tx-checksum-ip-generic: on

tx-checksum-ipv6: off [fixed]

tx-checksum-fcoe-crc: off [fixed]

tx-checksum-sctp: off [fixed]

scatter-gather: on

tx-scatter-gather: on

tx-scatter-gather-fraglist: on

tcp-segmentation-offload: on

tx-tcp-segmentation: on

tx-tcp-ecn-segmentation: on

tx-tcp-mangleid-segmentation: on

tx-tcp6-segmentation: on

generic-segmentation-offload: on

generic-receive-offload: on

large-receive-offload: off [fixed]

rx-vlan-offload: off [fixed]

tx-vlan-offload: off [fixed]

ntuple-filters: off [fixed]

receive-hashing: off [fixed]

highdma: on

rx-vlan-filter: off [fixed]

vlan-challenged: off [fixed]

tx-lockless: on [fixed]

netns-local: off [fixed]

tx-gso-robust: off [fixed]

tx-fcoe-segmentation: off [fixed]

tx-gre-segmentation: off [fixed]

tx-gre-csum-segmentation: off [fixed]

tx-ipxip4-segmentation: off [fixed]

tx-ipxip6-segmentation: off [fixed]

tx-udp_tnl-segmentation: off [fixed]

tx-udp_tnl-csum-segmentation: off [fixed]

tx-gso-partial: off [fixed]

tx-tunnel-remcsum-segmentation: off [fixed]

tx-sctp-segmentation: on

tx-esp-segmentation: off [fixed]

tx-udp-segmentation: on

tx-gso-list: on

fcoe-mtu: off [fixed]

tx-nocache-copy: off

loopback: off [fixed]

rx-fcs: off [fixed]

rx-all: off [fixed]

tx-vlan-stag-hw-insert: off [fixed]

rx-vlan-stag-hw-parse: off [fixed]

rx-vlan-stag-filter: off [fixed]

l2-fwd-offload: off [fixed]

hw-tc-offload: off [fixed]

esp-hw-offload: off [fixed]

esp-tx-csum-hw-offload: off [fixed]

rx-udp_tunnel-port-offload: off [fixed]

tls-hw-tx-offload: off [fixed]

tls-hw-rx-offload: off [fixed]

rx-gro-hw: off [fixed]

tls-hw-record: off [fixed]

rx-gro-list: off

macsec-hw-offload: off [fixed]

rx-udp-gro-forwarding: off

hsr-tag-ins-offload: off [fixed]

hsr-tag-rm-offload: off [fixed]

hsr-fwd-offload: off [fixed]

hsr-dup-offload: off [fixed]
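That is a long list to read by eye. Only the features that are on and not marked [fixed] can actually be toggled, so those are the candidates worth testing. Here is a small sketch that filters ethtool -k output down to them; the function name is mine and the sample is a handful of lines from the output above.

```python
def toggleable_enabled_features(ethtool_output):
    """Return features reported as plain 'on' (i.e. not '[fixed]') by `ethtool -k`."""
    features = []
    for line in ethtool_output.splitlines():
        name, sep, state = line.partition(":")
        if not sep:
            continue  # not a "feature: state" line
        # "on [fixed]" and "off" are skipped; so is the "Features for X:" header,
        # whose state part is empty.
        if state.strip() == "on":
            features.append(name.strip())
    return features


SAMPLE = """\
Features for tun2:
rx-checksumming: off [fixed]
tx-checksumming: on
scatter-gather: on
tcp-segmentation-offload: on
generic-segmentation-offload: on
generic-receive-offload: on
tx-lockless: on [fixed]
"""

if __name__ == "__main__":
    for feature in toggleable_enabled_features(SAMPLE):
        print(feature)
```

On a router the input would come from the real command output (for example via subprocess) rather than a pasted sample.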

After disabling them one by one, the issue was solved when TCP segmentation offload (TSO) was disabled. The iperf3 test now shows close to 2 Gbit/s. I was able to re-enable GSO and GRO afterwards without any issues.

fabrizzio@OSR1CR4:~$ ethtool -K tun2 gso off

fabrizzio@OSR1CR4:~$ ethtool -K tun2 gro off

fabrizzio@OSR1CR4:~$ ethtool -K tun2 tso off

fabrizzio@OSR1FW2:~$ iperf3 -c 172.27.1.17

Connecting to host 172.27.1.17, port 5201

[ 5] local 172.27.1.18 port 57380 connected to 172.27.1.17 port 5201

[ ID] Interval Transfer Bitrate Retr Cwnd

[ 5] 0.00-1.00 sec 214 MBytes 1.79 Gbits/sec 370 2.14 MBytes

[ 5] 1.00-2.00 sec 206 MBytes 1.73 Gbits/sec 0 2.45 MBytes

[ 5] 2.00-3.00 sec 218 MBytes 1.82 Gbits/sec 121 1.16 MBytes

[ 5] 3.00-4.00 sec 218 MBytes 1.82 Gbits/sec 0 1.06 MBytes

[ 5] 4.00-5.00 sec 218 MBytes 1.82 Gbits/sec 0 1.23 MBytes

[ 5] 5.00-6.00 sec 214 MBytes 1.79 Gbits/sec 202 1.60 MBytes

[ 5] 6.00-7.00 sec 218 MBytes 1.82 Gbits/sec 471 2.19 MBytes

[ 5] 7.00-8.00 sec 206 MBytes 1.73 Gbits/sec 383 1.30 MBytes

[ 5] 8.00-9.00 sec 172 MBytes 1.45 Gbits/sec 0 2.62 MBytes

[ 5] 9.00-10.00 sec 194 MBytes 1.63 Gbits/sec 0 1.80 MBytes

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-10.00 sec 2.03 GBytes 1.74 Gbits/sec 1547 sender

[ 5] 0.00-10.00 sec 2.03 GBytes 1.74 Gbits/sec receiver

Now, there is no option to disable these tunnel offloads via the VyOS config, so I’d have to set up a script that runs on boot and after commits to fix this automatically.
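A minimal sketch of what such a script could look like, not something I have deployed. It just runs the same ethtool -K <interface> tso off command used above for every interface name passed on the command line. The function names are made up for illustration. How it gets triggered is an assumption too: VyOS's post-config boot script (/config/scripts/vyos-postconfig-bootup.script) would be one place to call it from.

```python
import subprocess
import sys


def tso_off_command(ifname):
    """Build the ethtool command that disables TSO on one interface."""
    return ["ethtool", "-K", ifname, "tso", "off"]


def disable_tso(ifnames, run=subprocess.run):
    """Disable TSO on each interface; `run` is injectable so this can be dry-run."""
    for ifname in ifnames:
        # check=True raises if ethtool fails (e.g. the interface does not exist).
        run(tso_off_command(ifname), check=True)


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (hypothetical file name): python3 disable_tso.py tun2 tun3
    disable_tso(sys.argv[1:])
```

Taking the interface names as arguments keeps the tunnel list out of the script itself, so one copy can be used on every router.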

But wait, there’s more.

So far the troubleshooting has been within GRETAP tunnels that originate & terminate within my private network (that runs IS-IS + SR).

I have also noticed poor behavior on GRE tunnels that ride on top of my private network but neither originate nor terminate within it. The symptoms were the same: TX drops on the ingress router, on the core-facing interface.

For those, the fix was much easier: disabling GRO on the router’s ingress interface (not on the core-facing interface).

Changing GRETAP to L2TPv3

I gave this a try, even though it implies creating a dummy interface to work around VyOS bug T1080:

set interfaces dummy dum3 address '192.168.254.254/24'

set interfaces dummy dum3 description 'Bug T1080 Workaround'

set interfaces l2tpv3 l2tpeth10 description 'OSR1FW2 - OSR1BR1 VLAN 543'

set interfaces l2tpv3 l2tpeth10 encapsulation 'ip'

set interfaces l2tpv3 l2tpeth10 mtu '1700'

set interfaces l2tpv3 l2tpeth10 peer-session-id '543'

set interfaces l2tpv3 l2tpeth10 peer-tunnel-id '543'

set interfaces l2tpv3 l2tpeth10 remote '192.168.254.11'

set interfaces l2tpv3 l2tpeth10 session-id '543'

set interfaces l2tpv3 l2tpeth10 source-address '192.168.254.13'

set interfaces l2tpv3 l2tpeth10 tunnel-id '543'

set interfaces bridge br2 member interface l2tpeth10 allowed-vlan '100'

set interfaces bridge br2 member interface l2tpeth10 native-vlan '100'

delete interfaces bridge br2 member interface tun2

It works just fine! I will have to do this with the rest.

Summary

In short:

- GRETAP tunnels configured on a VyOS router which then sends the GRE traffic out with an MPLS label on top: either disable TSO on the tunnel interface with a script, or change the tunnel to L2TPv3.

- GRE tunnels ingressing a VyOS router which then encapsulates them again with MPLS: disable GRO on the router ingress interface.